During the last few days I’ve been working on moving the blog content to a simpler and self-hosted solution. Future posts will be available at rg3.name.

2012-10-06

2012-06-11

Things to know about leap seconds and your Unix system

Let me start with a small apology for not writing anything new in several months. It’s amazing it’s been so long since my last post. Anyway, June 30th is approaching quickly and this past week I’ve been in charge of reviewing the code in our ATC system regarding the possible operational impact that the next leap second will have for controllers. Fortunately, some changes had been introduced for the previous leap second in 2008 to fix a few problems that did appear in the 2005 one, and this means the impact should be minimal and it will still be safe to fly over the Iberian Peninsula during the leap second midnight. ;)

Seeing me work on that, a few colleagues and friends have asked me what the leap second thing is about and if there’s anything they need to know or do on their home systems to handle it properly. The short answer is “No”. Most people won’t need to do a single thing but I’m sure some of them would enjoy a longer explanation, so here it goes.

What is a leap second?

For humans and regarding this topic, there are basically two ways to count time. On the one hand, you have systems based on the International System of Units. In these systems, a second is defined as a fixed amount of time: the duration of a specific number of periods of a specific radiation of the caesium-133 atom. No more, no less. Some time scales, like International Atomic Time or GPS Time, follow that definition, and time in them advances at a fixed pace. On the other hand, we humans like to give our time scales an astronomical meaning in daily use. The Earth completes one rotation on its axis in a specific period of time, and we define the duration of one day and, from that, one second, based on that movement. The UTC time scale, on which our time zones are based, works like that.

The problem with giving that astronomical meaning is that the time the Earth takes to complete one rotation is not constant, so the definitions of one second according to those two scales don’t match exactly. But, as human as we are, we decided to simplify things and define UTC in terms of a simpler, non-astronomical scale, International Atomic Time. To preserve the astronomical meaning of UTC, then, once in a blue moon we are forced to have a minute with 59 or 61 seconds (until now, only the latter), just like from time to time the year needs to have 366 days. The moment these leap seconds are introduced is decided on the go by the International Earth Rotation and Reference Systems Service according to observations, but so far the event has always taken place at the very end of June 30th or December 31st of a given year in the UTC time “zone”.

Since they were introduced, International Atomic Time and UTC time have fallen 34 seconds apart and, after June 30th, the difference will be 35 seconds.

How is a leap second introduced in theory?

In theory it’s very simple if we represent time as text or as a structure containing different pieces. If what we’re doing is adding a second, on the night of June 30th we’d have:

June 30th, 2012 23:59:57

June 30th, 2012 23:59:58

June 30th, 2012 23:59:59

June 30th, 2012 23:59:60 -- leap second here

July 1st, 2012 00:00:00

July 1st, 2012 00:00:01

July 1st, 2012 00:00:02

How is a leap second introduced in practice, in a computer?

In Unix systems (and other types of systems as well) there are a lot of system calls and different APIs to handle time in different and flexible ways. Unfortunately, most of them were not designed with leap seconds in mind, especially the most interesting ones from a computational point of view, because they are mainly based on Unix time. As we cannot easily represent going from 23:59:59 to 23:59:60 and then to 00:00:00, in most systems the leap second is introduced by advancing or putting back the clock one second at 23:59:59, like you’d do on a digital watch. So you’d be at the end of 23:59:59 and then you jump back to 23:59:59 again. Some other systems prefer to “freeze” the clock for one second so an application requesting the system time would not see a jump back in time between two consecutive system calls.
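To illustrate why Unix time has no room for 23:59:60, here is a minimal Python sketch. It only uses the standard `calendar` and `datetime` modules; the helper names `unix_time` and `datetime_accepts_leap_second` are made up for this example. `calendar.timegm` does plain arithmetic on the fields, so the leap second ends up with the same Unix timestamp as the following midnight, while `datetime` refuses a seconds value of 60 altogether.

```python
import calendar
from datetime import datetime


def unix_time(year, month, day, hour, minute, second):
    """Convert a broken-down UTC time to Unix time using plain arithmetic."""
    return calendar.timegm((year, month, day, hour, minute, second, 0, 0, 0))


def datetime_accepts_leap_second():
    """Return True if datetime can represent 23:59:60, False otherwise."""
    try:
        datetime(2012, 6, 30, 23, 59, 60)
    except ValueError:
        return False
    return True


before = unix_time(2012, 6, 30, 23, 59, 59)
leap = unix_time(2012, 6, 30, 23, 59, 60)   # the leap second itself
after = unix_time(2012, 7, 1, 0, 0, 0)

# The leap second and the next midnight share one Unix timestamp.
print(before, leap, after)
print("leap second collides with midnight:", leap == after)
print("datetime accepts second=60:", datetime_accepts_leap_second())
```

The collision is exactly what forces the kernel to either repeat a second or freeze the clock, as described above.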

Anyway, it’s a bit easier to make the applications that need it leap-second aware than to introduce a new standard API that would take leap seconds into account and be used by everyone. Because, honestly, most applications don’t care about leap seconds and, for those that do, it’s not that hard to detect that a leap second happened and handle the special case properly with a few lines of code.
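As a rough idea of what those few lines could look like, the following Python sketch compares the wall clock against a monotonic clock, which is not affected by clock steps. This is only one possible approach, not the code of any particular system; the function names `clock_step` and `watch`, and the half-second tolerance, are assumptions chosen for the example.

```python
import time


def clock_step(prev_wall, prev_mono, wall, mono, tolerance=0.5):
    """Return the apparent wall-clock step in seconds, or 0.0 if none.

    Both clocks should advance by the same amount between two samples.
    If the wall clock was stepped in between, the two elapsed times
    differ by the size of the step (negative for a jump back in time).
    """
    step = (wall - prev_wall) - (mono - prev_mono)
    return step if abs(step) > tolerance else 0.0


def watch(samples=3, interval=0.2):
    """Sample both clocks a few times and report any step that is seen."""
    prev_wall, prev_mono = time.time(), time.monotonic()
    for _ in range(samples):
        time.sleep(interval)
        wall, mono = time.time(), time.monotonic()
        step = clock_step(prev_wall, prev_mono, wall, mono)
        if step:
            print("wall clock stepped by about %.3f seconds" % step)
        prev_wall, prev_mono = wall, mono


if __name__ == "__main__":
    watch()
```

With a repeated or frozen second, the wall clock falls one second behind the monotonic clock, so `clock_step` would return a value close to -1.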

Enter NTP

NTP, or Network Time Protocol, is a tool that computers can use to stay in sync and properly on time. Specifically, it allows two computers to talk and exchange information about what time it is, allowing one of them, the client, to closely follow the time of the other one, the server, which is considered a trustworthy reference. This server may in turn be in sync with another, more trustworthy time source, and so on. Obviously, this cannot go on indefinitely, and at some point someone will have to trust a time source as being infallible.

NTP is needed because, even if computers have a precise internal hardware oscillator and clock, just like a normal watch it will go too fast or too slow. The NTP daemon running on the computer is able to measure this drift and inform the operating system kernel about it, so that system time advances at the proper pace and your clock stays on time.

The thing about NTP and leap seconds is that the protocol is leap-second aware. If a time source knows a leap second is about to take place, it can inform its clients about the upcoming leap second. These clients may in turn be servers to other clients, and they would propagate the leap second warning to them. The NTP daemon may inform the kernel about the upcoming leap second so the kernel applies the leap second jump, or may apply the jump itself if its configuration allows it to behave that way and it’s being run with enough privileges. Anyway, you probably won’t decide that as the operating system vendor will probably ship you the NTP daemon with a working configuration in that regard, so you only have to customize the reference server(s) if any or some security related parameters about who can query or modify the daemon state.
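The warning travels in the very first byte of every NTP packet, which packs a 2-bit leap indicator, a 3-bit version number and a 3-bit mode. The Python sketch below decodes that byte. The function name `parse_ntp_flags` and the sample byte are made up for illustration, and the descriptions in the table are paraphrased from the NTPv4 specification rather than quoted.

```python
# Meaning of the 2-bit leap indicator field (paraphrased).
LEAP_INDICATOR = {
    0: "no warning",
    1: "last minute of the day has 61 seconds",
    2: "last minute of the day has 59 seconds",
    3: "unknown (clock not synchronized)",
}


def parse_ntp_flags(first_byte):
    """Split the first byte of an NTP packet into (leap, version, mode)."""
    leap = (first_byte >> 6) & 0x3      # top 2 bits
    version = (first_byte >> 3) & 0x7   # next 3 bits
    mode = first_byte & 0x7             # low 3 bits
    return leap, version, mode


# Hypothetical first byte of a server reply: 01 100 100 in binary.
leap, version, mode = parse_ntp_flags(0x64)
print("version", version, "mode", mode, "->", LEAP_INDICATOR[leap])
```

A client that sees a leap indicator of 1 during the day of the leap second knows it has to insert a second at midnight UTC, and passes the same value on to its own clients.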

So, hopefully, if you have a Unix system synced with NTP, your computer will most likely receive a leap second warning before it happens and will make the clock jump at the proper moment at midnight without you having to do anything.

A few remaining questions

Who provides the original leap second warning? Many infallible time sources in NTP are connected to a GPS clock. This clock receives the current time from the satellites and the known offset between GPS time and UTC time. This difference will change after a leap second happens. The clock will also receive a leap second warning some time before it happens. Moreover, a properly configured NTP server (either for public use or for internal corporate or home use) should have its software or firmware updated so it knows in advance the leap second is going to take place, without having to wait for the upcoming modified UTC offset message from the satellites or the leap second warning. For example, if you use Linux and the NTP server from ntp.org, you can download a file from the NIST FTP server with an up-to-date list of leap seconds, save it to your hard drive and configure the NTP daemon with a “leapfile” directive in the configuration file to point the daemon to the list of known leap seconds. You can even do this if you don’t trust your upstream servers to properly propagate the leap second warning. More information can be found in the ntp.org wiki.
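To give an idea of what such a list contains, here is a small Python sketch that reads entries in the style of that file. It assumes the layout I know the file to have: comment lines start with `#`, and each data line holds a timestamp in seconds since 1900 followed by the TAI-UTC offset that applies from that moment. The sample text is an illustrative two-line excerpt written for this example, not a copy of the real file, and `parse_leap_list` is a hypothetical helper name.

```python
NTP_TO_UNIX = 2208988800  # seconds between 1900-01-01 and 1970-01-01

# Illustrative excerpt only; a real file has more entries and metadata lines.
SAMPLE = """\
# Illustrative excerpt in the style of a leap seconds list
3439756800  34  # 1 Jan 2009
3550089600  35  # 1 Jul 2012
"""


def parse_leap_list(text):
    """Return a list of (unix_time, tai_minus_utc) tuples."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split()
        ntp_seconds, tai_offset = int(fields[0]), int(fields[1])
        entries.append((ntp_seconds - NTP_TO_UNIX, tai_offset))
    return entries


for unix_time, offset in parse_leap_list(SAMPLE):
    print(unix_time, offset)
```

The second entry corresponds to July 1st, 2012 at 00:00:00 UTC, the moment from which the difference between International Atomic Time and UTC becomes 35 seconds.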

What if my computer is turned off before the leap second warning and turned on after it has happened? Nothing to worry about. At any moment the NTP daemon detects you’re off by about one second, it will step the clock to the proper time without affecting the drift calculations. For example, the manpage for ntpd in my system states the step will happen if it detects you’re off by more than 128 milliseconds.

Experiment for the future: play a video or a song on your computer at the moment the leap second happens and see if it stalls for one second or some other weird thing happens, to test whether your movie or audio player is able to handle the leap second. File a bug if it’s not. :)

As you can see, keeping your computers in sync is really easy even in the event of a leap second. It becomes a bit trickier when the system has several different devices of different brands and ages and each one of them takes the time reference from a different source, but most home computer owners don’t need to do anything special, and most people simply won’t care about the leap second anyway.


2010-12-19

Frustration with Yahoo! and RFC 5965

In the past, I mentioned several times that I don’t receive spam. It’s not completely true, but it’s very true. My normal level of spam messages is about one each month. I have achieved this by using approaches like Yahoo! AddressGuard, and translating that same scheme to GMail when I moved to GMail. My e-mail address is publicly accessible by anyone and exposed in this blog (“Contact me” in the right column). If you take a look at the source of that page, you’ll notice how I wrote it so you can copy-paste the address to your e-mail client while keeping spammers at bay.

When one of my addresses is compromised, I can change it right away, yet I prefer not to change them too often, to avoid annoying people who want to contact me from time to time. For this reason, when I receive a spam message in one of my accounts, the first thing I do is report it. If I received 100 spam messages a day, I couldn’t do this, but as I receive one a month, I don’t mind spending 5 minutes reporting the message. Only if the spam doesn’t stop and apparently increases do I change that address.

Reporting a message is quite straightforward, but I don’t have it automated. I could, but I haven’t bothered yet. Basically, I view the source of the message and look for “Received from” headers. I find the first one, in chronological order, that appears to be a valid public SMTP server that people should trust. Then, I run “whois” on that IP address and find the ISP or organization owning that network block, and report the message to the abuse address they provide as part of the “whois” reply. If they don’t provide an abuse address, usually I send it to the technical contact that appears in the “whois” reply, and also to the “abuse@” address of the company’s main domain, just in case it actually exists and is being read.
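The first half of that procedure could be automated along these lines. This is only a sketch using Python’s standard `email` package, not something I actually run: the function name `received_ips`, the regular expression and the sample message (which uses documentation-only addresses and domains) are all made up for the example. It lists the IP addresses found in the “Received” headers in chronological order, leaving the judgment about which server to trust, and the “whois” lookup, to a human.

```python
import email
import re

# Matches an IPv4 address written between square brackets.
BRACKETED_IPV4 = re.compile(r"\[(\d{1,3}(?:\.\d{1,3}){3})\]")

SAMPLE = "\n".join([
    "Received: from relay.example.net (relay.example.net [203.0.113.5])"
    " by mx.example.org; Sat, 18 Dec 2010 10:00:02 +0000",
    "Received: from sender.example.com (unknown [192.0.2.10])"
    " by relay.example.net; Sat, 18 Dec 2010 10:00:00 +0000",
    "From: spammer@example.com",
    "Subject: Buy now",
    "",
    "Spam body",
])


def received_ips(raw_message):
    """Return the IPs found in Received headers, oldest hop first."""
    message = email.message_from_string(raw_message)
    hops = message.get_all("Received", [])
    ips = []
    # Each server prepends its header, so the last one is the oldest.
    for hop in reversed(hops):
        match = BRACKETED_IPV4.search(hop)
        if match:
            ips.append(match.group(1))
    return ips


print(received_ips(SAMPLE))
```

Keep in mind that the oldest headers can be forged by the spammer, which is why the first hop that belongs to a server you trust is the one worth reporting.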

In my e-mail client I have a template to report spam. I fire a new message from the template, fill the “To” field with the addresses just mentioned and copy-paste the full spam message source at the end of my message, which consists of a very brief message to the person that could be reading it, saying I received a spam message apparently coming from their network block. As I said, this takes 5 minutes and could be automated.

Sometimes, the spam message comes from a Yahoo! account, using their servers, and I follow the same procedure, emailing abuse@yahoo.com. This is the case of the latest spam message I received, two days ago. I proceeded to report the spam as I always do and received a reply from Yahoo! with the following contents.

Thank you for your email, but this address now only accepts messages in Abuse Reporting Format (http://tools.ietf.org/html/rfc5965)

To report abuse manually (or to get help with security or abuse related issues), please go to Yahoo! Abuse: http://abuse.yahoo.com

For questions about using Yahoo! services, please visit Yahoo Help: http://help.yahoo.com

Thank you,

– Yahoo! Customer Care

Note: Please do not reply to this email as replies will not be answered.

A quick Google search revealed a few people upset by this. Apparently, Yahoo! has been applying this policy since the beginning of December. The RFC they mention in that first paragraph is from August. People are upset for several reasons. The RFC is so recent there are almost no tools to handle or create reports in that format yet. For that reason, they are cutting people out of the loop. The second option is going to their website and reporting the spam message there. This means two things: that you have to treat Yahoo! in a special way when reporting spam and that you have to be annoyed by their web form to report spam. It’s annoying because the landing page has no direct form to report spam. As of today, you have to click on “I want to report spam” (this opens a new window or tab), then copy, to separate locations, the full email headers into one box and the message contents into another one. Fantastic. So you can’t simply upload the message or paste the full contents into a form. No, no. You have to carefully select the message headers first, then copy them, then paste them on the form, then copy the message body, then paste it on the form, then pass a captcha.

I was also a bit upset by this, so I read RFC 5965 a little bit. It looked simple if you only wanted to fill the required parts, and had a simple report example at the end, so I searched for a tool that would convert an e-mail message to a report based on these parameters. I didn’t find any tool immediately. I realized Python has a very comprehensive and easy to use package to handle e-mail messages, so I investigated a little bit and decided to spend the rest of the evening trying to create such a tool. The result has been uploaded to github as the spamreport repository, but don’t try to use it immediately. I have some bad news. Python’s e-mail library is amazingly simple and, in the end, including all the code to check program options and such, the program is exactly 100 lines long, so it’s very short and straightforward, and should work perfectly. However, it doesn’t work.
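For reference, the overall shape of such a report can be sketched with Python’s `email` package as follows. This is not the code from the spamreport repository, just a minimal illustration of the three-part structure as I understand it from the RFC: a human-readable note, a machine-readable part with the required fields, and the original message. The function name `build_arf_report`, the subject line and the `ExampleReporter/0.1` user agent are made up for the example.

```python
from email import message_from_string
from email.mime.base import MIMEBase
from email.mime.message import MIMEMessage
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def build_arf_report(spam_source, sender, recipient, note):
    """Wrap a spam message in a multipart/report feedback report."""
    # The keyword report_type becomes the "report-type" parameter.
    report = MIMEMultipart("report", report_type="feedback-report")
    report["From"] = sender
    report["To"] = recipient
    report["Subject"] = "Abuse report"

    # Part 1: brief text for the person reading the report.
    report.attach(MIMEText(note, "plain", "us-ascii"))

    # Part 2: machine-readable fields (hypothetical user agent name).
    feedback = MIMEBase("message", "feedback-report")
    feedback.set_payload(
        "Feedback-Type: abuse\n"
        "User-Agent: ExampleReporter/0.1\n"
        "Version: 1\n"
    )
    report.attach(feedback)

    # Part 3: the full original message, as message/rfc822.
    report.attach(MIMEMessage(message_from_string(spam_source)))
    return report


spam = "From: spammer@example.com\nSubject: Buy now\n\nSpam body\n"
report = build_arf_report(spam, "me@example.org", "abuse@example.net",
                          "This is an abuse report for a spam message.")
print(report.get_content_type(), report.get_param("report-type"))
```

Calling `report.as_string()` produces the text that would be handed to an SMTP client. Note that Python writes the report-type value between quotes, which is one of the cosmetic differences discussed further down.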

I have tried submitting an abuse report to Yahoo! in that format several times, making minor changes to the code, tweaking my program here and there, and every time the report has been rejected. Yahoo! does not explain why the report is being rejected in their reply, which, by the way, is a bit against the RFC itself. Section 4:

When an agent that accepts and handles ARF messages receives a

message that purports (by MIME type) to be an ARF message but

syntactically deviates from this specification, that agent SHOULD

ignore or reject the message. Where rejection is performed, the

rejection notice (either via an SMTP reply or generation of a

DSN) SHOULD identify the specific cause for the rejection.

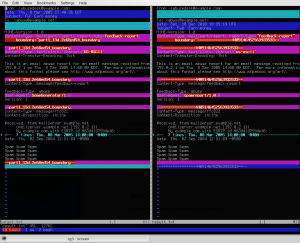

As they are replying via SMTP with a rejection, they SHOULD explain the reason but they’re not doing it, and that’s why this is so frustrating. At first, I thought GMail was mangling the reports so I sent one to my own accounts at another e-mail provider, and it came out unmangled on the other end. GMail is not manipulating the reports. Just so you get an idea, here’s a screenshot from a test case. I took the simple report example they give in the RFC and attempted to create a similar report with my tool, using the same spam message and the same notification text, just to see what the differences were. Click on the image to view it in full size.

As you can see, apparently the only differences are:

- The header order for “To”, “From”, “Date” and “Subject” differs (this should be irrelevant).

- The words “feedback-report” are quoted in my output because Python writes them that way. This should also be irrelevant.

- The MIME boundary markers differ (irrelevant and are generated randomly for each message).

- The words “us-ascii” are in lowercase in my output. Python writes them in lowercase even if I put them in uppercase, and this should be irrelevant too.

- The User-Agent string changes (obviously).

Yet the reports are being rejected by Yahoo! I’m puzzled at this moment and won’t tag the release as 1.0.0 until the reports are accepted or proved to be correct, but I don’t know what more to check. I suspect there’s a minor flaw I haven’t detected. If you spot it, please let me know. The code is on the net.

2010-11-20

Disabling antialiasing for a specific font with freetype

In the following paragraphs I’ll describe how to disable antialiasing for a specific font with freetype. The individual pieces that need to be put together to achieve this are well documented, but a Google search didn’t turn up many relevant results regarding this specific topic, so I hope anyone else searching for quick instructions will find the following text useful and in the first page of a web search.

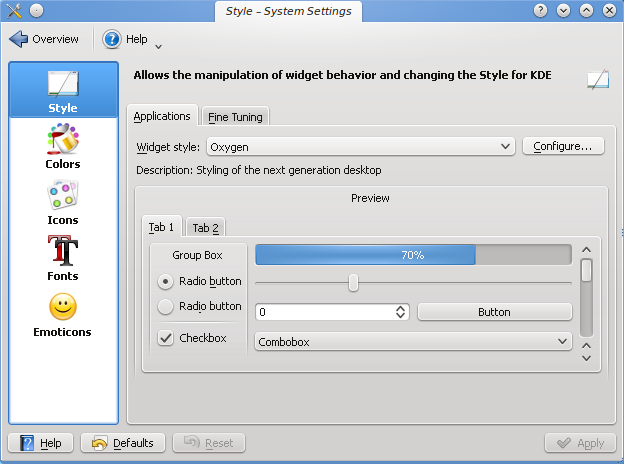

As you may know, freetype is normally configured by creating files in /etc/fonts/conf.avail and creating symlinks to those files in /etc/fonts/conf.d. Normally, separating each configuration parameter or parameter group to individual files lets you easily enable and disable specific font-rendering features by creating and destroying symlinks. One of these configurable features usually enabled in any distribution is to parse the file ~/.fonts.conf to allow every user to set their own font rendering parameters. For example, when KDE configures the font rendering features from the “System Settings” panel, it overwrites your ~/.fonts.conf. If you want to disable antialiasing for a specific font in freetype, you can either create a new config file in /etc/fonts/conf.avail and link to it in /etc/fonts/conf.d, setting it for any user, or adding the setting in your own ~/.fonts.conf. If you do the later, be sure to back file up somewhere, because fiddling with the font settings in your Destkop Environment may overwrite the file.

Going to specific details, I recently installed the Tahoma font from my Windows installation and wanted to use it with the bytecode interpreter and without antialiasing in the GUI, so it would look like this:

However, the rest of the fonts look ugly with those settings, so I wanted to disable antialiasing for the Tahoma font only, and only in sizes of 10 points or less. For bigger sizes, antialiasing would be enabled. Long story short, here are the settings that need to be integrated into your personal ~/.fonts.conf or put in an individual file in /etc/fonts/conf.{avail,d}. I’ll explain the contents next.

<?xml version='1.0'?>
<!DOCTYPE fontconfig SYSTEM 'fonts.dtd'>
<fontconfig>
  <match target="font">
    <test qual="any" name="family">
      <string>Tahoma</string>
    </test>
    <!-- pixelsize or size -->
    <test compare="more_eq" name="size" qual="any">
      <double>1</double>
    </test>
    <test compare="less_eq" name="size" qual="any">
      <double>10</double>
    </test>
    <edit mode="assign" name="antialias">
      <bool>false</bool>
    </edit>
    <edit name="autohint" mode="assign"><bool>false</bool></edit>
  </match>
  <match target="font">
    <test qual="any" name="family">
      <string>Tahoma</string>
    </test>
    <!-- pixelsize or size -->
    <test compare="more_eq" name="pixelsize" qual="any">
      <double>1</double>
    </test>
    <test compare="less_eq" name="pixelsize" qual="any">
      <double>14</double>
    </test>
    <edit mode="assign" name="antialias">
      <bool>false</bool>
    </edit>
    <edit name="autohint" mode="assign"><bool>false</bool></edit>
  </match>
</fontconfig>

I don’t want to go into specific details about the rules above. There is an XML header that needs to be present in any configuration file, and it contains a “fontconfig” section. Inside that section, you can put any number of “match” sections among other things, and we need two. One specifies the rules in terms of point size and another one in terms of pixel size. Both are needed for some reason.

The matches look for fonts named Tahoma and disable antialiasing and autohinting for them in some specific sizes. The exact point and pixel sizes depend on your X server and/or Xft settings. Most people set the DPI value to 75, 96 or 100. In KDE, you can override the current setting from the style configuration window. DPI stands for “Dots Per Inch”. In this case, pixels per inch. Normally it should really match your monitor. That is, if you have a 22″ screen with a specific resolution in pixels, you’d specify a DPI setting that would match the real DPI. However, like I said, most people use 75, 96 or 100 (I set it to 96 myself) and it DOES NOT match the real DPI. Depending on the DPI setting, your fonts will look bigger or smaller at the same size in points. In my case, I was interested in sizes lower than 10. Hence the match you can read above.

To write the pixel size match you need to calculate the equivalent of those point-values in pixels. This is easily calculated knowing two constants: the DPI value you’re currently using and knowing that an inch has exactly 72 points. So the equivalent in pixels of a 10-point distance in a 96 DPI screen is the following:

10 points, in inches: 10 / 72 = 0.1388

With 96 pixels per inch, those are: 0.1388 * 96 = 13.33, or 14 pixels rounding the number up, which is what you see in the config file I pasted above.
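If you use a different DPI setting or a different size limit, the same arithmetic can be wrapped in a couple of lines of Python. This is just the calculation above written as code; the function names `points_to_pixels` and `pixel_limit` are made up for the example.

```python
import math

POINTS_PER_INCH = 72


def points_to_pixels(points, dpi):
    """Convert a size in points to pixels for a given DPI setting."""
    return points * dpi / POINTS_PER_INCH


def pixel_limit(points, dpi):
    """Round the pixel size up, as done for the pixelsize test above."""
    return math.ceil(points_to_pixels(points, dpi))


for dpi in (75, 96, 100):
    print(dpi, "DPI:", round(points_to_pixels(10, dpi), 2), "->",
          pixel_limit(10, dpi), "pixels")
```

For 10 points at 96 DPI this gives the same 14 pixels used in the second match; with another DPI value, replace the 14 in the config file with whatever `pixel_limit` returns.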

2010-11-06

youtube-dl has moved to github.com

Some days ago, youtube-dl, my most popular project, moved from being managed using Mercurial at bitbucket.org to being managed using Git at github.com. Since the move, I’ve been wanting to write something about it. I’ve also been wanting to rewrite the program partly or completely every time I look at its source code, but that’s a different matter. Back to the main topic.

I should start by apologizing to anyone who thinks this is a bad move, either because they may have to rebase all their work on a new repository, bringing all their changes back, or because they registered at bitbucket.org simply to follow the project. It currently has 17 forks and 100 followers, and I’m pretty sure some of them registered there just to follow youtube-dl. For them, the move to github.com is, if anything, a problem, because they would have to create an account somewhere else to continue following the project. Again, apologies to anyone for whom the move has no practical benefits.

That said, I’d like to explain why I made the move. You may recall I wrote an article some time ago about Mercurial vs. Git. Apart from explaining what I considered were the main differences between the two, I also wanted to express my indecision about which one was better. While I think Mercurial is and was great, the balance has been leaning towards Git for some time now, and I tend to use Git for all my personal projects. Many of the reasons, if not all of them, have been expressed by other people in the past. It’s a good moment to quote a very well known blog post from Theodore Tso, written in 2007 when he was still planning to migrate e2fsprogs to Git from Mercurial:

The main reason why I’ve come out in favor of git is that I see its potential as being greater than hg, and so while it definitely has some ease-of-use and documentation shortcomings, in the long run I think it has “more legs” than hg, […]

I think that paragraph describes with great accuracy what I think too. In the medium and long run, Git’s problems almost vanish. Its documentation was a bit poor back then, but people have been writing more and more about Git and there are a few very good resources to learn its internals and basic features. Furthermore, once you have a simple idea about its internals and use it daily, you no longer need that much documentation. If you’re not sure how to do something, chances are a simple web search will tell you how to do what you wanted to achieve.

Also, as many people know, Mercurial was and is mostly about not modifying the project’s history, while Git has a lot of commands that directly modify the project’s history. With time, I’ve come to realize that modifying the project’s history is simply more practical in many cases, and in a range of situations it leads to less confusion. In my day job, we are slowly moving from CVS to Subversion to manage the sources of a very old and important project, which has existed since about 1984. At the same time, we are modifying our work flow here and there to take advantage of Subversion, and we’re heavily using branching and merging despite the fact that’s not one of Subversion’s greatest strengths, as you may know. That’s giving us some problems and it’s amazing how many times I have caught myself thinking “this would be much easier if we were using git, because we would simply do this and that and job done”. Many of those actions would modify the project’s history and clean it up. I repeat, in real situations with a lot of people working on something and not doing everything exactly as it should be done, it’s only a matter of time before you miss a Git feature.

The only thing I don’t like about Git is its staging area. From a technical perspective, the staging area makes a lot of sense, and you can build many neat features based on it. However, having a staging area is one thing and exposing it to end users is another. I think you can have a staging area and all the features it provides while hiding it from users in their most common work flows. Still, it’s something you get used to, and everybody knows that, when your project is a bit mature, you spend way more time browsing the source code, debugging, running it and testing it than actually committing changes to the source tree. The staging area is not a big issue and “git commit -a” covers the most common cases.

Apart from Git itself, the move was partly motivated by the site, github.com. When I started using bitbucket.org I liked it a bit more than github.com, but things have changed slowly. github.com fixed a rendering bug that hid part of the project top bar, got rid of its Flash-based file uploader and got an internal issue tracker with a web interface that works really, really well. The site is very nice and the “pages” feature, which allows you to set up a simple web page for the project, is still not provided by bitbucket.org as far as I know. In addition, with the arrival of Firesheep, it quickly moved to using SSL for everything. It’s fantastic.

bitbucket.org was recently bought by Atlassian and their plans are indeed better. For me, however, the number of private repositories and private collaborators is not an issue, because all the projects I host on github.com are public. Still, it’s fair to mention their plans because it could be a deciding factor for some people.

I wouldn’t like to close this article without mentioning the big improvement that both sites bring to the typical free and open source software developer. I still host a few projects on sourceforge.net, and I can tell you I’m not going back to it despite the great service they have provided for years for which I thank them sincerely.

It’s been months since I last used it, so I apologize if things have changed without me noticing, but back then it was very hard to get your code on sourceforge.net. You didn’t perceive it was hard because there was no github.com. Once you try github.com or bitbucket.org, you realize how much the process can be simplified. Two key aspects to note. First, the project name doesn’t have to be unique. It only needs to be unique among your own projects, which makes choosing the project name a lot simpler. Second, once the project is created and has a basic description, without filling in any form and without having to wait for anything, you are only a few commands away from uploading your code to the Internet. It can literally take less than 5 minutes to create a project and have your code publicly available, and that’s fantastic and motivating. You don’t need to find time to upload your code or wonder whether the process is worth it for the size of the project. You simply do it. That’s good news for everyone.

Let me finish by apologizing again to anyone for the inconveniences created by the move. I sincerely hope this will remain the project location for many years to come.